AI Products / Services

ZIA DV700 Series

Overview

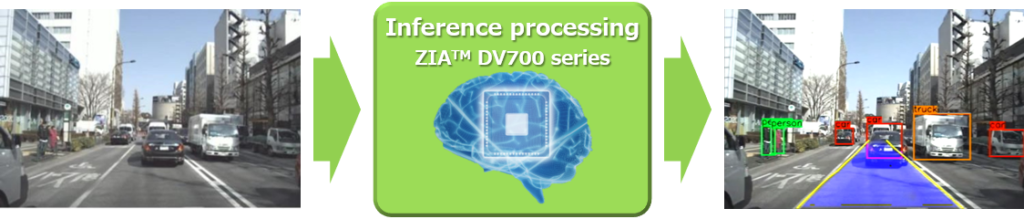

A configurable AI inference processor IP whose performance and size can be optimized for the target application. It processes all kinds of data, such as images, video, and sound, on the edge side, where real-time performance, safety, and privacy protection are required.

Features

High precision

The computing unit of the DV700 Series supports FP16 floating-point arithmetic as standard, so AI models trained on PCs and cloud servers can be used without retraining. Because it maintains high inference precision, it is an ideal AI processor IP for AI systems that require high reliability, such as autonomous driving and robotics.
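To illustrate why FP16 support can make retraining unnecessary, the following minimal sketch rounds a few weights to half precision and compares the error against a simple symmetric 8-bit integer quantization, a common alternative that often needs calibration or retraining. The weight values, the `to_fp16` and `to_int8` helpers, and the quantization scheme are assumptions chosen for illustration; none of this is DMP's SDK or the DV700's internal data path.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value (returned as a Python float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_int8(x: float, scale: float) -> float:
    """Symmetric 8-bit quantization followed by dequantization (illustrative only)."""
    q = max(-128, min(127, round(x / scale)))
    return q * scale


# Made-up weights standing in for a model trained in FP32 (assumption).
weights = [0.0123, -0.5, 0.3333, 1.75, -0.0009]

# Per-tensor scale so that the largest weight maps to 127 (assumption).
scale = max(abs(w) for w in weights) / 127

fp16_err = max(abs(w - to_fp16(w)) for w in weights)
int8_err = max(abs(w - to_int8(w, scale)) for w in weights)

print(f"max abs error after FP16 rounding:     {fp16_err:.2e}")
print(f"max abs error after INT8 quantization: {int8_err:.2e}")
```

For these sample values the FP16 rounding error is well below the 8-bit quantization error, which is the intuition behind running a trained model directly in FP16.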

Compatible with various DNN models

The DV700 series has a hardware configuration optimized for deep learning inference and can run various DNN models for tasks such as object detection, semantic segmentation, skeleton estimation, and distance estimation.

Examples of compatible models: MobileNet, YOLO v3, SegNet, PoseNet

Development environment (SDK / Tool) that facilitates AI application development

The DV700 series comes with a development environment (SDK / Tool) that accompanies the IP core. The development environment supports the standard AI development frameworks (Caffe, Keras, TensorFlow), so customers can easily run AI inference on the DV700 series by preparing a model built with one of these frameworks.

* Please refer to GitHub for details of the development environment (SDK / Tool).

Specifications

Outlined specifications of ZIA DV740

・Up to 1 kMAC (2 TOPS @ 1 GHz)

・Processor replaced with an optimized controller

・High-bandwidth on-chip RAM (512 KB to 4 MB)

・8-bit weight compression

・Framework

・Caffe 1.x, Keras 2.x

・TensorFlow 1.15

・ONNX format support
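The throughput and memory figures above can be checked with some quick arithmetic. The sketch below assumes that "1 kMAC" means 1,000 multiply-accumulate units each completing one MAC per clock cycle, that one MAC counts as two operations (a multiply and an add), and that 1 KB is 1,024 bytes. The function names are hypothetical, and the weight counts are upper bounds that ignore activations and any other use of the on-chip RAM.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second."""
    return macs_per_cycle * ops_per_mac * clock_hz / 1e12


def max_weights(ram_bytes: int, bytes_per_weight: int) -> int:
    """Upper bound on weights that fit if the whole RAM held only weights."""
    return ram_bytes // bytes_per_weight


KB = 1024
MB = 1024 * KB

# 1,000 MACs x 2 ops x 1 GHz
print(f"peak throughput: {peak_tops(1000, 1e9)} TOPS")

# Compare 1-byte (8-bit) weights with 2-byte (FP16) weights for both RAM sizes.
for ram in (512 * KB, 4 * MB):
    print(
        f"{ram // KB:>5} KB RAM: "
        f"{max_weights(ram, 1):>9,} 8-bit weights, "
        f"{max_weights(ram, 2):>9,} FP16 weights"
    )
```

Under these assumptions the stated 2 TOPS follows directly from 1 kMAC at 1 GHz, and storing weights in 8 bits doubles the number that fit in a given RAM size compared with FP16.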

Related News

- 2020-10-28: DMP’s edge AI processor IP core “ZIA DV720” has been adopted for amnimo’s industrial IoT devices

- 2020-07-13: DMP releases IP Core “ZIA DV740”

- 2020-07-01: Basemark and DMP Partner to Develop Smart Mirrors for commercial vehicles

- 2019-05-21: DMP released ZIA DV720 IP Core (in Japanese)

- 2018-09-18: DMP started sales of edge AI FPGA Modules “ZIA C2” and “ZIA C3” (in Japanese)